-

Notifications

You must be signed in to change notification settings - Fork 907

[pool-2-thread-1] WARN CoreNLP - java.lang.RuntimeException: Ate the whole text without matching. Expected is ' CD-SS3.4', ate 'CD-SS3.4a' #1052

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Comments

|

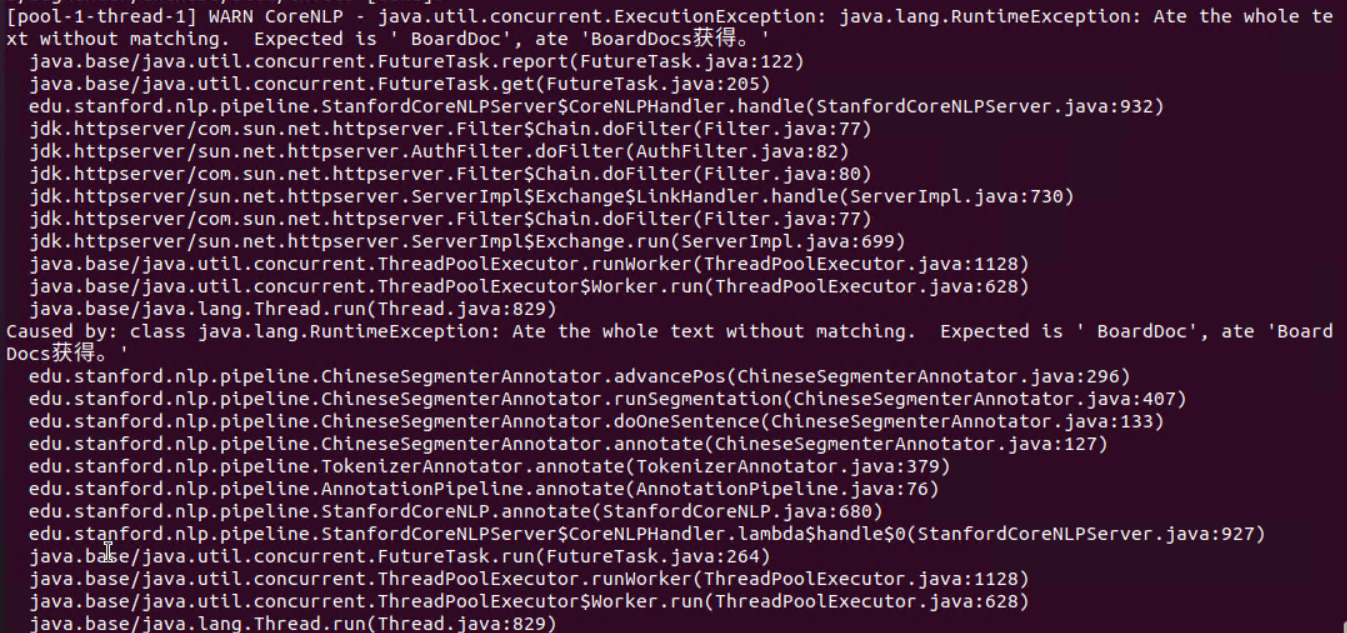

And in some cases, the warning message on the server is something like the following: |

|

It seems that the server would choke on and complain about certains strings, such as the following Chinese one, which also contains an ASCII string: You can test it on: https://corenlp.run/ by selecting Chinese as the language. |

|

Found it. Debugging these things is a giant PITA, by the way. Obviously it needs to be fixed, though, so thanks for pointing it out. Also, I learned that our segmenter doesn't properly keep 蓝妹妹 as a single word. It's probably for the best, considering the test cases I was giving it. If you can replace NBSP with spaces for a few days, I'll try to get out a new release next week. We can't do it this week because our PI wants to make some upgrades to the tokenizer and Stanford is on fire. |

|

It has since come to my attention that the Chinese segmentation standard may very well split last name from first name, and |

|

A CoreNLP release with this fix has been made, so it should no longer be a problem for Stanza |

|

I will dig into why this is happening a bit more, but at a high level, it

seems you are putting in NBSP instead of ascii spaces and the pipeline is

not properly handling that. Perhaps you could use regular spaces instead

while we come up with a more permanent solution.

…On Tue, Jun 21, 2022 at 12:28 AM jiangweiatgithub ***@***.***> wrote:

It seems that the server would choke on and complain about certains

strings, such as the following Chinese one, which also contains an ASCII

string:

讨论或批准的其他主题可通过 BoardDocs 获得。

The server message:

[image: image]

<https://user-images.githubusercontent.com/14370779/174741440-f05ec616-cae2-43d3-addd-5a1bc466e20d.png>

You can test it on: https://corenlp.run/ by selecting Chinese as the

language. The

—

Reply to this email directly, view it on GitHub

<#1052 (comment)>,

or unsubscribe

<https://github.com/notifications/unsubscribe-auth/AA2AYWJ2LDO2SYVVZJYVRPDVQFVKRANCNFSM5ZIG2Q6A>

.

You are receiving this because you are subscribed to this thread.Message

ID: ***@***.***>

|

I started a server using the following command line in a Ubuntu hyper-v server on winserver 2016:

java -Xmx16g -cp "*" edu.stanford.nlp.pipeline.StanfordCoreNLPServer -serverProperties StanfordCoreNLP-chinese.properties -port 9009 -timeout 150000

When I try running the following python code:

requests.post('http://192.168.1.5:9009/tregex?pattern=NP|NN&filter=False&properties={"annotators":"tokenize,ssplit,pos,ner,depparse,parse","outputFormat":"json"}', data = {'data':tgt0}, headers={'Connection':'close'}).json()

The execution sticks there, and I see the following memssage on the server:

[main] INFO CoreNLP - --- StanfordCoreNLPServer#main() called ---

[main] INFO CoreNLP - Server default properties:

(Note: unspecified annotator properties are English defaults)

annotators = tokenize, ssplit, pos, lemma, ner, parse, coref

coref.algorithm = hybrid

coref.calculateFeatureImportance = false

coref.defaultPronounAgreement = true

coref.input.type = raw

coref.language = zh

coref.md.liberalChineseMD = false

coref.md.type = RULE

coref.path.word2vec =

coref.postprocessing = true

coref.print.md.log = false

coref.sieves = ChineseHeadMatch, ExactStringMatch, PreciseConstructs, StrictHeadMatch1, StrictHeadMatch2, StrictHeadMatch3, StrictHeadMatch4, PronounMatch

coref.useConstituencyTree = true

coref.useSemantics = false

coref.zh.dict = edu/stanford/nlp/models/dcoref/zh-attributes.txt.gz

depparse.language = chinese

depparse.model = edu/stanford/nlp/models/parser/nndep/UD_Chinese.gz

entitylink.wikidict = edu/stanford/nlp/models/kbp/chinese/wikidict_chinese.tsv.gz

inputFormat = text

kbp.language = zh

kbp.model = none

kbp.semgrex = edu/stanford/nlp/models/kbp/chinese/semgrex

kbp.tokensregex = edu/stanford/nlp/models/kbp/chinese/tokensregex

ner.applyNumericClassifiers = true

ner.fine.regexner.mapping = edu/stanford/nlp/models/kbp/chinese/gazetteers/cn_regexner_mapping.tab

ner.fine.regexner.noDefaultOverwriteLabels = CITY,COUNTRY,STATE_OR_PROVINCE

ner.language = chinese

ner.model = edu/stanford/nlp/models/ner/chinese.misc.distsim.crf.ser.gz

ner.useSUTime = false

outputFormat = json

parse.model = edu/stanford/nlp/models/srparser/chineseSR.ser.gz

pos.model = edu/stanford/nlp/models/pos-tagger/chinese-distsim.tagger

prettyPrint = false

segment.model = edu/stanford/nlp/models/segmenter/chinese/ctb.gz

segment.serDictionary = edu/stanford/nlp/models/segmenter/chinese/dict-chris6.ser.gz

segment.sighanCorporaDict = edu/stanford/nlp/models/segmenter/chinese

segment.sighanPostProcessing = true

ssplit.boundaryTokenRegex = [.。]|[!?!?]+

tokenize.language = zh

[main] INFO CoreNLP - Threads: 12

[main] INFO CoreNLP - Starting server...

[main] INFO CoreNLP - StanfordCoreNLPServer listening at /0:0:0:0:0:0:0:0:9009

[pool-2-thread-1] INFO edu.stanford.nlp.pipeline.StanfordCoreNLP - Adding annotator tokenize

[pool-2-thread-1] INFO edu.stanford.nlp.ie.AbstractSequenceClassifier - Loading classifier from edu/stanford/nlp/models/segmenter/chinese/ctb.gz ... done [21.0 sec].

[pool-2-thread-1] INFO edu.stanford.nlp.pipeline.StanfordCoreNLP - Adding annotator ssplit

[pool-2-thread-1] INFO edu.stanford.nlp.pipeline.StanfordCoreNLP - Adding annotator pos

[pool-2-thread-1] INFO edu.stanford.nlp.tagger.maxent.MaxentTagger - Loading POS tagger from edu/stanford/nlp/models/pos-tagger/chinese-distsim.tagger ... done [1.3 sec].

[pool-2-thread-1] INFO edu.stanford.nlp.pipeline.StanfordCoreNLP - Adding annotator depparse

[pool-2-thread-1] INFO edu.stanford.nlp.parser.nndep.DependencyParser - Loading depparse model: edu/stanford/nlp/models/parser/nndep/UD_Chinese.gz ... Time elapsed: 1.7 sec

[pool-2-thread-1] INFO edu.stanford.nlp.parser.nndep.Classifier - PreComputed 20000 vectors, elapsed Time: 2.301 sec

[pool-2-thread-1] INFO edu.stanford.nlp.parser.nndep.DependencyParser - Initializing dependency parser ... done [4.0 sec].

[pool-2-thread-1] INFO edu.stanford.nlp.pipeline.StanfordCoreNLP - Adding annotator parse

[pool-2-thread-1] INFO edu.stanford.nlp.parser.common.ParserGrammar - Loading parser from serialized file edu/stanford/nlp/models/srparser/chineseSR.ser.gz ... done [5.7 sec].

[pool-2-thread-1] INFO edu.stanford.nlp.pipeline.StanfordCoreNLP - Adding annotator lemma

[pool-2-thread-1] INFO edu.stanford.nlp.pipeline.StanfordCoreNLP - Adding annotator ner

[pool-2-thread-1] INFO edu.stanford.nlp.ie.AbstractSequenceClassifier - Loading classifier from edu/stanford/nlp/models/ner/chinese.misc.distsim.crf.ser.gz ... done [3.2 sec].

[pool-2-thread-1] INFO edu.stanford.nlp.pipeline.TokensRegexNERAnnotator - ner.fine.regexner: Read 21238 unique entries out of 21249 from edu/stanford/nlp/models/kbp/chinese/gazetteers/cn_regexner_mapping.tab, 0 TokensRegex patterns.

[pool-2-thread-1] INFO edu.stanford.nlp.pipeline.NERCombinerAnnotator - numeric classifiers: true; SUTime: false [no docDate]; fine grained: true

[pool-2-thread-1] INFO edu.stanford.nlp.wordseg.ChineseDictionary - Loading Chinese dictionaries from 1 file:

[pool-2-thread-1] INFO edu.stanford.nlp.wordseg.ChineseDictionary - edu/stanford/nlp/models/segmenter/chinese/dict-chris6.ser.gz

[pool-2-thread-1] INFO edu.stanford.nlp.wordseg.ChineseDictionary - Done. Unique words in ChineseDictionary is: 423200.

[pool-2-thread-1] INFO edu.stanford.nlp.wordseg.CorpusChar - Loading character dictionary file from edu/stanford/nlp/models/segmenter/chinese/dict/character_list [done].

[pool-2-thread-1] INFO edu.stanford.nlp.wordseg.AffixDictionary - Loading affix dictionary from edu/stanford/nlp/models/segmenter/chinese/dict/in.ctb [done].

[pool-2-thread-1] INFO edu.stanford.nlp.wordseg.CorpusChar - Loading character dictionary file from edu/stanford/nlp/models/segmenter/chinese/dict/character_list [done].

[pool-2-thread-1] INFO edu.stanford.nlp.wordseg.AffixDictionary - Loading affix dictionary from edu/stanford/nlp/models/segmenter/chinese/dict/in.ctb [done].

[pool-2-thread-1] WARN CoreNLP - java.lang.RuntimeException: Ate the whole text without matching. Expected is ' CD-SS3.4', ate 'CD-SS3.4a'

edu.stanford.nlp.pipeline.ChineseSegmenterAnnotator.advancePos(ChineseSegmenterAnnotator.java:296)

edu.stanford.nlp.pipeline.ChineseSegmenterAnnotator.runSegmentation(ChineseSegmenterAnnotator.java:407)

edu.stanford.nlp.pipeline.ChineseSegmenterAnnotator.doOneSentence(ChineseSegmenterAnnotator.java:133)

edu.stanford.nlp.pipeline.ChineseSegmenterAnnotator.annotate(ChineseSegmenterAnnotator.java:127)

edu.stanford.nlp.pipeline.TokenizerAnnotator.annotate(TokenizerAnnotator.java:379)

edu.stanford.nlp.pipeline.AnnotationPipeline.annotate(AnnotationPipeline.java:76)

edu.stanford.nlp.pipeline.StanfordCoreNLP.annotate(StanfordCoreNLP.java:680)

edu.stanford.nlp.pipeline.StanfordCoreNLPServer$TregexHandler.lambda$handle$7(StanfordCoreNLPServer.java:1332)

java.base/java.util.concurrent.FutureTask.run(FutureTask.java:264)

java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128)

java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:628)

java.base/java.lang.Thread.run(Thread.java:829)

The text was updated successfully, but these errors were encountered: